Navigation of mobile robotic systems in dynamic three-dimensional space

Full title of the project: Navigation of mobile robotic systems in dynamic three-dimensional space based on a complex of visual data from several multidirectional optical and laser sensors

Project number: 22-21-20033

Principal investigator: Roman Lavrenov

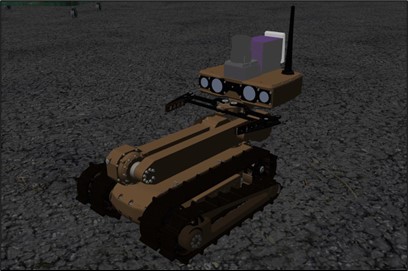

Fig. 1. Servosila Engineer robot model, used for virtual testing of algorithms in the Gazebo simulator.

About the project

Modern robotics is trending toward greater autonomy of robotic systems. Research aims to increase the number of tasks that robots can perform independently, without manual operator control.

One such task is autonomous navigation in the absence of a GPS/GLONASS signal. Leading scientific laboratories and commercial companies such as Google, Tesla, and Yandex are working on it to implement autonomous functions in cars and delivery robots. The task is also relevant when robotic devices operate in conditions hazardous to people: in fire zones or in areas of chemical or radiation contamination. When an operator controls the robot manually, the connection can be lost, and expensive equipment with it, so the need for autonomous navigation arises here as well.

To navigate in dynamic conditions without a GPS/GLONASS signal, when the environment map is unknown in advance or only partially known, related computer vision problems must also be solved, for instance localization and mapping. The current trend is to combine these into the single task of simultaneous localization and mapping (SLAM).

Various onboard sensors are used to build a map, localize the robot on it, and plan a path when no GPS/GLONASS signal is available. Examples include laser rangefinders, digital optical cameras, and stereo cameras. Laser rangefinders can be used to build a 2D map for navigation, while optical cameras can be used to build a 3D map in the form of point clouds or voxels. Both approaches have disadvantages. A 2D map loses all information above and below the working plane of the laser rangefinder, and optical devices have a high measurement error even with perfect calibration and camera settings.

The scientific novelty of the project is an algorithm that plans an optimal trajectory for a mobile robot according to a given penalty function, using integrated data: data from the laser rangefinder and data from the cameras refine each other. As a consequence of the integration, a more accurate 3D map of the environment will be obtained. Using the complexity map, it will be possible to compute safer and more optimal paths for complex mobile robotic platforms. The approach is also relevant to autonomous return tasks.
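The loss of information outside the laser plane can be illustrated with a minimal Python sketch. It is not the project's software: the function names, the grid resolution, and the height band are assumptions chosen for illustration. Obstacle cells from a 2D laser scan are merged with cells obtained by projecting onto the same grid those 3D camera points that lie within the robot's height.

```python
import math


def scan_to_cells(scan_xy, resolution):
    """Convert 2D laser hit points (x, y) into occupied grid cells."""
    return {(math.floor(x / resolution), math.floor(y / resolution))
            for x, y in scan_xy}


def cloud_to_cells(cloud, min_height, robot_height, resolution):
    """Project 3D points (x, y, z) onto the grid, keeping only points
    the robot body could collide with (min_height < z <= robot_height)."""
    cells = set()
    for x, y, z in cloud:
        if min_height < z <= robot_height:
            cells.add((math.floor(x / resolution), math.floor(y / resolution)))
    return cells


def fused_obstacle_cells(scan_xy, cloud, min_height=0.05,
                         robot_height=1.0, resolution=0.5):
    """Union of laser cells and projected camera cells (hypothetical helper).

    Assumes both inputs are already expressed in the same map frame.
    """
    return (scan_to_cells(scan_xy, resolution)
            | cloud_to_cells(cloud, min_height, robot_height, resolution))
```

For a table, for example, the laser typically registers only the legs in its scanning plane, while the projected camera points add the cell under the tabletop; floor and ceiling points fall outside the height band and are ignored.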

During the first year of the project, the following work was carried out:

1. Review of recent developments in localization and mapping based on onboard sensor data.

2. Calculation and justification of formulas for the optimal combination of two- and three-dimensional maps using calibration information from data sources.

3. Calculation and justification of formulas for refining and shifting data in a three-dimensional map (point cloud) using a two-dimensional map (built from laser rangefinder data).

4. Development of software for combining two-dimensional and three-dimensional maps based on the ROS framework.

5. The criteria for route optimality on a three-dimensional map were analyzed, and the criteria were parametrized with respect to a trajectory specified parametrically as a composite spline.

6. Robot models have been prepared for virtual testing.

7. A new model of a mobile robot has been developed that will not impose additional restrictions on mapping algorithms due to the specifics of the on-board sensing or movement system.

8. Non-trivial, realistic cases and environments have been prepared for testing algorithms; they make it possible to include the dynamic behavior of scene infrastructure objects and other active agents.
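The idea behind item 3, shifting point cloud data using the two-dimensional map, can be sketched as follows. The formulas derived in the project are not given here, so this example substitutes a deliberately simple technique: a single translation-only nearest-neighbor alignment step (comparable to one ICP iteration restricted to translation). The function name and the `band` parameter are hypothetical.

```python
import math


def align_cloud_to_scan(cloud, scan_xy, scan_height, band=0.05):
    """Shift a 3D cloud in XY so that its slice at the laser height
    moves toward the 2D laser points.

    cloud:       list of (x, y, z) points from the camera
    scan_xy:     list of (x, y) laser points in the same frame
    scan_height: height of the laser scanning plane
    band:        half-thickness of the cloud slice compared to the scan
    Returns the shifted cloud and the applied (dx, dy) offset.
    """
    slice_xy = [(x, y) for x, y, z in cloud if abs(z - scan_height) <= band]
    if not slice_xy or not scan_xy:
        return list(cloud), (0.0, 0.0)

    dx = dy = 0.0
    for p in slice_xy:
        # Nearest laser point to this cloud point (brute force search).
        q = min(scan_xy, key=lambda s: math.dist(p, s))
        dx += q[0] - p[0]
        dy += q[1] - p[1]
    dx /= len(slice_xy)
    dy /= len(slice_xy)

    # Apply the mean offset to every point, not only to the slice.
    shifted = [(x + dx, y + dy, z) for x, y, z in cloud]
    return shifted, (dx, dy)
```

The offset is estimated only from points near the laser plane, where the two sensors observe the same surfaces, and is then applied to the whole cloud. A practical implementation would also estimate rotation and iterate until convergence.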

During the second year of the project, the following work was carried out:

1. An extended list of criteria has been compiled for assessing the optimality of a route built from a Voronoi graph and a cubic B-spline on two-dimensional and three-dimensional maps. The criteria in the extended list were analyzed, yielding a list of the most important evaluation criteria.

2. A methodology has been developed for conducting pilot experimental studies to identify the most suitable mobile platform among the list of candidates.

3. Test results were obtained for the algorithms that integrate data from optical sensors to construct and update a common three-dimensional map, and for the software simulator that implements them in a virtual environment.

4. An algorithm has been developed for planning the optimal trajectory according to a given cost function for a mobile robot in a three-dimensional environment.

5. Software implementing the algorithm for planning the optimal trajectory of a mobile robot in a three-dimensional environment has been developed. The modular software was written in C++ on top of the Robot Operating System (ROS) and developed in the virtual environment of the Gazebo simulator. A software interface has been implemented for dynamically changing the parameters of the path planning algorithm.
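The route representation and cost evaluation mentioned in items 1 and 4 can be illustrated with a short sketch. The project software is written in C++; Python is used here only for brevity. The control points are assumed to come from a route found on a Voronoi graph, and the cost terms (path length plus a clearance penalty) and their weights are hypothetical stand-ins for the project's criteria.

```python
import math


def bspline_point(p0, p1, p2, p3, t):
    """Point on one uniform cubic B-spline segment, t in [0, 1]."""
    b0 = (1 - t) ** 3 / 6.0
    b1 = (3 * t**3 - 6 * t**2 + 4) / 6.0
    b2 = (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6.0
    b3 = t**3 / 6.0
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


def sample_spline(control_points, samples_per_segment=20):
    """Sample the composite spline formed by sliding 4-point windows."""
    path = []
    for i in range(len(control_points) - 3):
        window = control_points[i:i + 4]
        for k in range(samples_per_segment):
            path.append(bspline_point(*window, k / samples_per_segment))
    if len(control_points) >= 4:
        path.append(bspline_point(*control_points[-4:], 1.0))
    return path


def trajectory_cost(path, obstacles, safe_distance=0.5,
                    w_length=1.0, w_clearance=10.0):
    """Weighted sum of path length and a penalty for samples that come
    closer than safe_distance to any obstacle point."""
    length = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    penalty = 0.0
    if obstacles:
        for p in path:
            d = min(math.dist(p, o) for o in obstacles)
            penalty += max(0.0, safe_distance - d) ** 2
    return w_length * length + w_clearance * penalty
```

A planner could compare candidate control point sets by calling `trajectory_cost(sample_spline(points), obstacles)` and keeping the cheapest one. Exposing `safe_distance` and the weights as arguments loosely mirrors the idea of changing planner parameters at run time.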

During the project, 21 scientific publications were prepared: 18 in venues indexed in the international citation databases Scopus and Web of Science, and 3 abstracts indexed in the RSCI. Materials have been prepared for one intellectual property item.

Publications on the project topic:

[1] Magid, E., Matsuno, F., Suthakorn, J., Svinin, M., Bai, Y., Tsoy, T., Safin, R., Lavrenov, R., Zakiev, A., Nakanishi, H., Hatayama, M., Endo, T. (2022). e‑ASIA Joint Research Program: development of an international collaborative informational system for emergency situations management of flood and land slide disaster areas. Artificial Life and Robotics, 27(4), pp. 613–623.

[2] Safarova, L., Abbyasov, B., Tsoy, T., Li, H., Magid, E. (2022). Comparison of Monocular ROS-based Visual SLAM Methods. Interactive Collaborative Robotics 7th International Conference, 2022.

[3] Apurin, A., Dobrokvashina, A., Abbyasov, B., Tsoy, T., Martinez-Garcia, E., Magid, E. (2022). LIRS-ArtBul: Design, modelling and construction of an omnidirectional chassis for a modular multipurpose robotic platform. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), № 13719, p. 70-80.

[4] Abdulganeev, R., Lavrenov, R., Safin, R., Bai, Y., Magid, E. (2022). Door handle detection modelling for Servosila Engineer robot in Gazebo simulator. Siberian Conference on Control and Communications (SIBCON 2022), p. 1-4.

[5] Lychko, S., Tsoy, T., Li, H., Martinez-Garcia, E.A., Magid, E. (2022). ROS network security for a swing doors automation in a robotized hospital. Siberian Conference on Control and Communications (SIBCON 2022), p. 1-6.

[6] Dubelschikov, A., Tsoy, T., Bai, Y., Svinin, M., Magid, E. (2022). IOT cameras network based approach for improving unmanned aerial vehicle control. International Scientific Forum on Control and Engineering, p. 169-171.

[7] Safin, R., Tsoy, T., Lavrenov, R., Afanasyev, I., Magid, E. (2023). Modern Methods of Map Construction Using Optical Sensors Fusion. International Conference on Artificial Life and Robotics (ICAROB 2023), pp. 167-170.

[8] Dobrokvashina A., Lavrenov R., Magid E., Bai Y., Svinin M. How to Create a New Model of a Mobile Robot in ROS/Gazebo Environment: An Extended Tutorial // International Journal of Mechanical Engineering and Robotics Research. – 2023. – Vol. 12. – No. 4. – pp. 192-199.

[9] Iskhakova A. LIRS-Mazegen: An Easy-to-Use Blender Extension for Modeling Maze-Like Environments for Gazebo Simulator / B. Abbyasov, T. Tsoy, T. Mironchuk, M. Svinin, E. Magid // Frontiers in Robotics and Electromechanics. – 2023. – Vol. 329. – pp. 147-161.

[10] Mustafin M., Chebotareva E., Li H., Magid E. Experimental Validation of an Interface for a Human-Robot Interaction Within a Collaborative Task // International Conference on Interactive Collaborative Robotics. – Cham : Springer Nature Switzerland, 2023. – pp. 23-35.

[11] Apurin A., Abbyasov B., Martinez-Garcia E. A., Magid E. Comparison of ROS Local Planners for a Holonomic Robot in Gazebo Simulator // International Conference on Interactive Collaborative Robotics. – Cham : Springer Nature Switzerland, 2023. – pp. 116-126.

[12] Eryomin A., Safin R., Tsoy T., Lavrenov R., Magid E. Optical Sensors Fusion Approaches for Map Construction: A Review of Recent Studies // Journal of Robotics, Networking and Artificial Life. – 2023. – Vol. 10(2). – pp. 127-130.

[13] Dubelschikov A., Tsoy T., Li H., Magid E. IoT Cameras Network Based Motion Tracking for an Unmanned Aerial Vehicle Control Interface // 7th International Scientific Conference on Information, Control, and Communication Technologies (ICCT-2023) (online; October 02-06 2023). – 2023.

[14] Kidiraliev E., Lavrenov R. Stereo Visual System for Ensuring a Safe Interaction of an Industrial Robot and a Human // 7th International Scientific Conference on Information, Control, and Communication Technologies (ICCT-2023) (online; October 02-06 2023). – 2023.

[15] Sultanov R., Lavrenov R., Sulaiman S., Bai Y., Svinin M., Magid E. Object Detection Methods for a Robot Soccer // 7th International Scientific Conference on Information, Control, and Communication Technologies (ICCT-2023) (online; October 02-06 2023). – 2023.

[16] Zagirov A., Chebotareva E., Tsoy T., Martinez-Garcia E. A. A New Virtual Human Model Based on AR-601M Humanoid Robot for a Collaborative HRI Simulation in the Gazebo Environment // 7th International Scientific Conference on Information, Control, and Communication Technologies (ICCT-2023) (online; October 02-06 2023). – 2023.

[17] Dubelschikov A. A., Tsoy T. G., Li H., Magid E. A. Intelligent System Concept of an IoT Cameras Network Application with a Motion Tracking Function for an Unmanned Aerial Vehicle Teleoperation // 7th International Scientific Conference on Information, Control, and Communication Technologies (ICCT-2023). – 2023.

[18] Zagirov A. I., Chebotareva E. V., Tsoy T. G., Martinez-Garcia E. A. A Virtual Human Model Based on Anthropomorphic Robot for Collaborative HRI Simulation in Gazebo Environment // 7th International Scientific Conference on Information, Control, and Communication Technologies (ICCT-2023) (online; October 02-06 2023). – 2023.

[19] Sultanov R. R., Lavrenov R. O., Sulaiman S., Bai Y., Svinin M. M., Magid E. A. Open CV library-based robot detection methods // 7th International Scientific Conference on Information, Control, and Communication Technologies (ICCT-2023) (online; October 02-06 2023). – 2023.

[20] Abdulganeev R., Lavrenov R., Dobrokvashina A., Bai Y., Magid E. Autonomous door opening with a rescue robot. 10th International Conference on Automation, Robotics and Applications (ICARA 2024), 2024.

[21] Tukhtamanov, N., Lavrenov, R., Chebotareva, E., Svinin, M., Tsoy, T., Magid, E. (2022). Open source library of human models for Gazebo simulator. Siberian Conference on Control and Communications (SIBCON 2022), p. 1-5.